NMEA 2000 bandwidth, Garmin & Furuno issues?

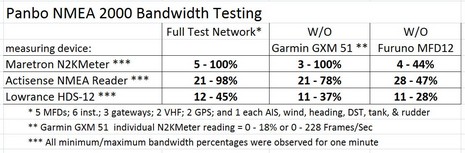

Maybe you thought I was off drilling multiple holes in Gizmo’s bottom — and I was! — but I’ve also been toiling away at the lab’s test NMEA 2000 network. You see, I’d heard that Garmin’s GXM 51 XM Weather sensor might be a bandwidth hog, and also that Furuno’s NN3D MFDs generate an inordinate amount of network traffic. There seems to be some truth to both accusations, but don’t panic! (And you N2K naysayers, please save your snarking until you hear the whole story.) Yes, as you can see on the table above, removing either of these devices reduced bandwidth use of a fairly large network significantly. But even with both devices live on the network, I didn’t see any data problems, and am pretty sure these Garmin and Furuno issues will only cause real issues on very large networks. Let me break down the testing and what I think I’ve learned…

As you can see in the table, I’ve got three ways of measuring the percentage of N2K bandwidth in use at any given moment, and their measurements are all different! But at least they’re different in a consistent way. Maretron’s N2KMeter, though otherwise a very powerful diagnostic tool, was probably the worst for this task because it seems to sample net traffic at a very high rate and N2K networks seem to have sharp highs (and lows) of bandwidth usage (that don’t cause any problems). Actisense’s NMEA Reader, attached to the network via a NGT-1 Gateway, seems to sample bandwidth percentage over a slightly longer period, and hence didn’t generate the extremes N2KMeter did over 60 seconds of observation. And the Lowrance HDS, which measures bandwidth use as part of its diagnostics, seems to measure over an even longer period, and also to come up with consistently lower numbers. The Simrad NSE has the same ability and also seems to come up with low numbers relative to others.
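One way to see why the three tools disagree is to run different averaging windows over the same bursty traffic. The sketch below is not how any of these products actually measure; the traffic pattern is made up, and the 250 bits per millisecond figure simply assumes the nominal 250 kbit/s bus rate.

```python
def peak_load(bits_per_ms, window_ms, bus_bits_per_ms=250):
    """Highest average bus load seen by any sliding window of window_ms."""
    prefix = [0]
    for bits in bits_per_ms:
        prefix.append(prefix[-1] + bits)
    best = 0.0
    for start in range(len(bits_per_ms) - window_ms + 1):
        used = prefix[start + window_ms] - prefix[start]
        best = max(best, used / (window_ms * bus_bits_per_ms))
    return best


def synthetic_traffic(duration_ms=5000):
    """Hypothetical traffic: short full-rate bursts over a quiet baseline."""
    traffic = []
    for t in range(duration_ms):
        if t % 100 < 10:        # 10 ms burst at full bus rate every 100 ms
            traffic.append(250)
        elif t % 1000 < 50:     # a busier stretch once per second
            traffic.append(150)
        else:                   # light background chatter
            traffic.append(25)
    return traffic


traffic = synthetic_traffic()
for window in (10, 100, 1000):
    print(f"{window:>5} ms window: peak load {peak_load(traffic, window):.0%}")
```

The shorter the window, the more a momentary burst dominates the reading, which would fit a fast-sampling meter reporting sharper highs than a display that averages over a longer period.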

Despite all this flakiness, the numbers do tell a story. Apparently the Garmin GXM 51 can use nearly 20% of the bandwidth at instances. The N2KMeter can look at individual bus traffic percentage, as well as frames per second, and the GXM 51 is the consistent highliner on my network with momentary scores like the 18.7% seen below, which seems to equate to 228 frames/sec. But, again, don’t panic. It’s obviously hard to say what its true average frame rate is (due to measuring inconsistencies), but it’s not all that much. And, by way of comparison, I measured the following maximum frame rates over one minute: Airmar PB200, 77; Avia Onboard simulating dual engines, wind, GPS, & more via Actisense NGT-1, 122; Garmin GMI 10 (every device outputs something), 3. At any rate, why Garmin chose to put XM Weather on NMEA 2000, and what it can do for you, is another story I hope to tell soon.
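For a rough cross-check of frames per second against bus percentage, here is a back-of-envelope sketch. It assumes every frame is a 29-bit extended CAN frame carrying a full 8 data bytes on a 250 kbit/s bus: 128 bits plus 3 bits of inter-frame space, and up to 29 extra stuff bits in the worst case. Real traffic mixes frame lengths, so treat the results as a ballpark only.

```python
BUS_BITS_PER_SECOND = 250_000   # nominal NMEA 2000 bit rate
FRAME_BITS_MIN = 131            # 8-byte extended frame + inter-frame space, no stuff bits
FRAME_BITS_MAX = 160            # same frame with worst-case bit stuffing


def bus_load(frames_per_second, bits_per_frame):
    """Fraction of the bus consumed by a steady stream of frames."""
    return frames_per_second * bits_per_frame / BUS_BITS_PER_SECOND


# Peak frame rates quoted in the post
measured = [
    ("Garmin GXM 51", 228),
    ("Avia Onboard via NGT-1", 122),
    ("Airmar PB200", 77),
    ("Garmin GMI 10", 3),
]

for name, fps in measured:
    low = bus_load(fps, FRAME_BITS_MIN)
    high = bus_load(fps, FRAME_BITS_MAX)
    print(f"{name:<24} {fps:>4} frames/s  ~{low:.1%} to {high:.1%} of the bus")
```

Under these assumptions 228 frames/sec works out to roughly 12% to 15% of the bus, the same ballpark as the meter's momentary 18.7% but not identical, which is consistent with the reading depending on how and when the meter samples.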

The Furuno MFD12 is a very different story, and, in fact, may be old news. (I don’t have the latest software loaded, but if fixed already, I trust Furuno Tech will let us know.) As seen on the N2KMeter, the MFD12 looks like a normal display with little output, but apparently it’s regularly and rapidly requesting ID info from all the other devices on the network, and you can see in the table all the traffic that generates. What it’s doing, I’m told, is not illegal, but it’s meant for problem solving, not constant use. I can also see the problem in NMEA Reader, which lists all PGNs together, unlike N2KAnalyzer, which shows them by device. PGN 126208, Request Group Function, shows up a lot when the MFD12 is powered on. (But N2KAnalyzer shows a device’s node address, which is how I knew that “MAC” 11 below is the GXM 51, while NMEA Reader is dim about specific devices; for the time being, sigh, you need both to have a maximum view of what’s going on.)

So there you go, some geek adventures into the NMEA 2000 nitty gritty. Let me emphasize again that none of this will mean beans on most boats using N2K. But it could on some, and maybe that’s why some of you may need to know about it, and why the manufacturers have to be careful.

I’ve said it before, N2K will run out of steam before it really gets out of the station. Add distributed switching, monitoring, DC systems, etc., and not only will we rapidly approach the 254 limit, we will have reliability issues all over the place. Witness Furuno’s nonsense with daisy chaining

doom I tell you doom…doom Dave

Which services did you try with the GXM 51? XM satellite radio? The specs for the Garmin GDL 69A list the downlink data rate as 38.4K bits per second. XM rates vary from 4 to 64 kbps (I can’t vouch for this).

The messages have priorities. The GXM 51 uses the proprietary PGN to transmit XM data. It uses the lowest or next to lowest priority possible. PGN such as GNSS data or engine dynamic will overrule the proprietary data.

So if the GPS has a GNSS data packet to send and the GXM 51 has some XM data to send, the GNSS data will be transmitted and not XM data. The only data that can be lost is data of equal or lower priority.

Ben, I can send you more on this if you want.

Norse, the test GXM 51 has a full Master Mariner subscription, so it’s getting all the weather data possible. It also has an XM Audio subscription, but the audio signal travels over its own cable, not over N2K.

Jake, I do understand N2K prioritization a bit, and should have mentioned it as one of the reasons that bandwidth could appear fully used for instances without any apparent data problems. Are you saying that the GXM 51 could never cause a problem because of the low priority of its messages?

Dave, have you considered an “N2K Doom” tattoo?

It appears that N2K boats should be thinking about data loading as much as they think about power loading. Just adding more devices could get us in trouble. No surprise since 250Kb is not much bandwidth.

But to do any meaningful load planning, we really need a better understanding of how the N2K network works. Perhaps an anonymous techie from Maretron or one of the mfgs can help answer some of these questions.

1) Where can we find out the size and priority of each PGN?

2) How often does a given device transmit each PGN?

3) Since apparently PGNs have priorities, how are they factored into the network performance? Does a device look at network load before deciding to send a PGN of a given priority? How do these priorities get applied in practice?

4) What happens when the network maxes out? Due to error recovery, some networks effectively lose bandwidth as they become overloaded. What is N2K’s failure mode?

And just for Ben:

5) Your network had 5 MFDs, presumably from 5 different mfgs. Are the non-Furuno MFDs just displays, or do they also output or bridge data?

6) Given what you know about #1-#4 above (presumably more than most of us) what did you expect to see in terms of network load?

I’ll get to your questions later, Russ, as I’m about to hit the road. But did want to report that the NMEA’s Steve Spitzer called to say that these particular issues were discussed recently at a technical meeting, that both manufacturers agreed to make changes that would reduce their bandwidth use, but that even as is they only caused issues on very large N2K systems.

Hi Ben,

First thing – the Actisense NMEA Reader’s “Rx bandwidth” value being shown indicates how much load there is on the serial port being monitored. This is not the same as the NMEA 2000 network bandwidth, but instead is purely how much of the serial port’s bandwidth is in use.

The default Actisense NGT-1 serial PC link baud rate is 115.2 Kbaud. At that speed, the displayed “Rx bandwidth” on NMEA Reader is approximately double the actual NMEA 2000 message network bandwidth. When the NGT-1 is using a baud rate of 230.4 Kbaud, then the “Rx bandwidth” value will be approximately equal to the NMEA 2000 network bandwidth.
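The two data points above suggest a simple linear scaling between the serial link's "Rx bandwidth" and the underlying N2K load. The helper below is only a sketch built on that assumption; the function name is made up, and it is not part of any Actisense software.

```python
REFERENCE_BAUD = 230_400  # baud rate at which "Rx bandwidth" roughly equals N2K load


def estimate_n2k_load(rx_bandwidth_pct, serial_baud):
    """Scale NMEA Reader's serial "Rx bandwidth" to an approximate N2K bus load.

    Assumes the relationship is linear in baud rate, which matches the two
    cases described above (about 2x at 115.2 Kbaud, about 1x at 230.4 Kbaud).
    """
    return rx_bandwidth_pct * serial_baud / REFERENCE_BAUD


# A 40% reading on the default 115.2 Kbaud link suggests about 20% on the bus
print(estimate_n2k_load(40, 115_200))
# The same bus load on a 230.4 Kbaud link would read about 20% directly
print(estimate_n2k_load(20, 230_400))
```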

The NGT-1 does actually monitor the true NMEA 2000 bandwidth, and this discussion has proven that displaying it simply alongside the “Rx bandwidth” on NMEA Reader would be a valuable feature – I’ll add it to the next release.

To help solidify what Jake mentioned, Manufacturer Proprietary PGN messages have the lowest possible priority on the NMEA 2000 bus, so regardless of how many are sent by a single node, they can only ever block other Proprietary messages. All standard PGN’s have a higher priority and will get through long before these Proprietary messages are allowed on the bus.

The NMEA 2000 / CAN priority system is wrapped up in the lowest hardware level and is automatic without causing any loss of bandwidth. That means, unlike Ethernet (which can lose a large part of its possible bandwidth with message collisions), a CAN bus network can get very close to 100% true usage with zero message collisions (i.e. no loss of bandwidth).

As long as manufacturers are intelligent with their network use and do not generate unnecessary requests for information (which the NMEA is really trying to reduce – and it’s getting better, slowly), even the largest NMEA 2000 networks (50 nodes strong) should be able to operate very well.

Is it naïve of me to believe that a clever developer could write a script to act as a user configurable domain controller that managed and buffered network traffic?

What is the bandwidth of a standard NMEA-2000 network in MB/s (if it can be compared to Ethernet)?

I have the Garmin weather receiver and if the data that it downloads from XM is anywhere near to saturating the N2K network then that says something. Downloading from the XM satellite is not exactly fast, it takes about 15 minutes to get data that takes seconds over my broadband ethernet — while my wife is watching videos over the same link…

It does feel a bit like N2K is reinventing the wheel. You could probably go through all of the old RFCs for Ethernet to solve some of these issues (subnets?)

Most of the advantages I see posted here for N2K over Ethernet are theoretical — at 10/ or 100/mbps there is really no such thing as a packet collision on any boat less complex than an aircraft carrier.

No, George, the Garmin GXM 51 is not saturating any NMEA 2000 network I know of. It does use more bandwidth than most devices, but it uses it in a polite way, waiting to transmit while more important data passes through. I saw that in my testing, and Andy explained it well in his comment above.

Nor is NMEA 2000 reinventing the wheel. It’s just that it’s based on CANbus, the standard for vehicle sensor networking, instead of Ethernet, which is more familiar to folks from the computing world. The way N2K is used on boats is, in fact, more analogous to a truck than to an office. Where more complex processors talk to each other on boats, like radar to MFD, Ethernet is used. But, unfortunately, almost all marine Ethernet data is proprietary, so far.

The slowness of your XM Weather system to gather data, by the way, has more to do with restricted satellite bandwidth than how it gets from the sensor to your screen.

Russ, Every device, MFDs included, outputs at least some network utility PGNs. All the MFDs are also capable of some data bridging, or even original content (like Set and Drift calculated by Raymarine E Series), I think. But I haven’t messed with those features much, and they are often poorly documented.

I really did not have a particular expectation about network bandwidth, and I think we’re seeing in these comments that many of us, me included, still don’t have our heads properly wrapped around the concept as it pertains to CANbus. Obviously the measuring devices I have are just a flaky glimpse at what’s going on (and I misunderstood one completely). It seems like I could add more devices to this network and, if they behave nicely, they wouldn’t really cause much average bandwidth use. On the other hand, we’re seeing that a single device can cause a lot of bandwidth use without even outputting data (but that’s being fixed).

It’s good to know more about how N2K uses its bandwidth, but comparing its bandwidth to Ethernet’s is silly, especially if a modern version of Ethernet is used, not one which is dog-years old.

Commodity Ethernet is 100 Mb/s these days, maybe even gigE, and 10 Gb/s Ethernet is available. N2K over Ethernet is easily doable, but Ethernet won’t replace N2K any time soon because that requires a non-proprietary standard and that is certainly not easy.

George – Networks are measured in bits / sec (small b). N2K is 250kb, or 250,000 bits / second, about 31KB, 31,000 bytes / sec.

As Norse says, 100Mb ethernet is a commodity item (i.e., very inexpensive) and Gigabit ethernet (a billion bits / sec) is standard on new computers.

Furuno’s NavNet is 100Mb, 400 times faster than N2K.
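The arithmetic behind that comparison, as a quick sketch. It uses the nominal 250 kbit/s NMEA 2000 rate and raw line rates only, ignoring framing overhead on both networks.

```python
N2K_BITS_PER_SECOND = 250_000              # nominal NMEA 2000 / CAN bit rate
FAST_ETHERNET_BITS_PER_SECOND = 100_000_000

# Raw bytes per second available on the N2K bus (before frame overhead)
n2k_bytes_per_second = N2K_BITS_PER_SECOND / 8

# How many times faster 100 Mb Ethernet is on paper
speed_ratio = FAST_ETHERNET_BITS_PER_SECOND / N2K_BITS_PER_SECOND

print(f"N2K: {n2k_bytes_per_second:,.0f} bytes/s raw")
print(f"100 Mb Ethernet is {speed_ratio:.0f}x the raw N2K bit rate")
```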

Ben: It sounds like you don’t know the answer to my questions #1-#4. There must be some N2K expert out there who does know. Please?

Russ, I’ll give it a shot. If I’m incomprehensible please excuse me, I’m typing this on a night watch on one of those rubberized keyboards — almost as bad as an iPhone!

Q1) Where can we find out the size and priority of each PGN?

A1) To get a complete list, buy the N2K standard. Otherwise run one of the analyzers available.

Q2) How often does a given device transmit each PGN?

A2) See #1.

Note that the standard can be deviated from. Some devices, such as Airmar, can be told to transmit at a different rate (per PGN).

Q3) Since apparently PGNs have priorities, how are they factored into the network performance? Does a device look at network load before deciding to send a PGN of a given priority? How do these priorities get applied in practice?

A3) CANbus is extremely clever in this respect, and it’s one of the reasons it doesn’t run any faster than it does. The timing on all CANbus systems is such that the transmission time along the bus is less than the time it takes to transmit a single bit. More than one sender can start transmitting at the same time. As long as the bits in the header (priority, PGN, source address) are the same then all senders keep sending. Since the header is by definition unique across the bus there is always a point where one device sends a ‘one’ and some other device a ‘zero’. At that point the sender that sent a one ‘loses’. It detects that the bus contains the ‘wrong’ state (as it is designed such that a single zero wins) and stops transmitting.

In this way there is no loss of bandwidth even on a busy (even a 99% loaded) system. It is also guaranteed that a higher prio message always transmits before a lower prio message.
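The bit-by-bit arbitration can be sketched in a few lines. This is a toy model, not driver code: the identifier layout follows the usual 29-bit arrangement (3-bit priority, 18-bit PGN field, 8-bit source address), and the priorities, PGNs and addresses in the example are illustrative assumptions. In CAN's convention the dominant level is written as 0, so the lowest identifier keeps the bus.

```python
def can_id(priority, pgn, source):
    """Build a 29-bit identifier: 3-bit priority, 18-bit PGN field, 8-bit source."""
    return ((priority & 0x7) << 26) | ((pgn & 0x3FFFF) << 8) | (source & 0xFF)


def arbitrate(identifiers):
    """Return the identifier that wins when all nodes start sending together."""
    contenders = set(identifiers)
    for bit in range(28, -1, -1):           # most significant bit goes out first
        sent = {ident: (ident >> bit) & 1 for ident in contenders}
        bus = min(sent.values())            # any dominant 0 pulls the whole bus to 0
        # Nodes that sent a recessive 1 but read back a 0 stop transmitting
        contenders = {ident for ident in contenders if sent[ident] == bus}
    (winner,) = contenders                  # unique identifiers leave exactly one
    return winner


# Illustrative values: a GNSS position PGN at priority 3 versus a
# proprietary-range PGN at priority 7 from the device at address 11
gnss = can_id(priority=3, pgn=129029, source=20)
weather = can_id(priority=7, pgn=130816, source=11)

winner = arbitrate([weather, gnss])
print("GNSS frame wins" if winner == gnss else "proprietary frame wins")
```

Neither frame is damaged in the process: the loser simply backs off and retries once the bus is idle, which is why arbitration costs no bandwidth.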

Q4) What happens when the network maxes out? Due to error recovery, some networks effectively lose bandwidth as they become overloaded. What is N2K’s failure mode?

A4) It just maxes out. As the bus saturates, lower prio messages will not make it, i.e. they will be dropped. Bandwidth efficiency of CAN is 100%.

The failure mode is that a badly designed node will send out too many high prio messages never giving anyone else a chance. In this sense CAN implements ‘cooperative’ sharing of the bus.
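A toy simulation of that saturation behaviour, under loud assumptions: the bus carries exactly one frame per time slot, the source names, priorities and periods are invented, and unsent frames simply pile up in a queue (a real device would more likely overwrite or drop stale frames).

```python
import heapq


def simulate(sources, slots):
    """sources: list of (name, priority, period_in_slots); lower priority number wins.

    Returns (sent, backlog) dictionaries keyed by source name.
    """
    pending = []                            # heap of (priority, sequence, name)
    sent = {name: 0 for name, _, _ in sources}
    sequence = 0
    for slot in range(slots):
        for name, priority, period in sources:
            if slot % period == 0:          # this source has a new frame to send
                heapq.heappush(pending, (priority, sequence, name))
                sequence += 1
        if pending:                         # the bus carries one frame per slot
            _, _, name = heapq.heappop(pending)
            sent[name] += 1
    backlog = {name: 0 for name in sent}
    for _, _, name in pending:
        backlog[name] += 1
    return sent, backlog


# Offered load is 125% of the bus: 50% + 25% + 50%
sources = [
    ("engine", 2, 2),        # high priority, every 2nd slot
    ("gnss", 3, 4),          # medium priority, every 4th slot
    ("proprietary", 7, 2),   # lowest priority, every 2nd slot
]
sent, backlog = simulate(sources, slots=1000)
print("sent:   ", sent)
print("backlog:", backlog)
```

In this model the higher-priority sources get every frame through and only the lowest-priority traffic falls behind. Swap the priorities so the chatty source outranks the others and the same code shows the "badly designed node" case.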

Thanks, Kees, but I have heard that there are certain situations in which a highly loaded NMEA 2000 network can experience cascading failure. I think they’re exceedingly rare, but I would like to know more about why and how they happen.

Russ, you forgot to mention that the CANbus chips used in NMEA 2000 devices are also a commodity item. So is the rugged, waterproof, heavily shielded DeviceNet plug and cable system that NMEA specified for the N2K physical layer. Unfortunately, there doesn’t seem to be a standard for waterproof Ethernet plugs, and maybe not for “outdoor” Ethernet cable either.

Kees: If I understand you, you’re saying that network load planning cannot be done with documents in the public domain. Obviously the analyzer can’t be used as a planning tool, it’s after the fact. Anyone who wants to do load planning for an N2K network needs to buy the standard?

I recall that about two years ago Furuno told me that the PGNs used by their MFD were proprietary information.

What is with this shroud of secrecy on PGNs? Isn’t it reasonable that someone should be able to do network load planning with information in the public domain?

It appears that Ben’s original posting has opened a door which allowed only a sliver of light into a very dark room.

Ben: While there is no standard for waterproof Ethernet plugs, there are plenty of available choices from Molex or Amphenol that meet the mil-spec ethernet connector requirements. All it would take for such a connector to be standard in marine applications is for the manufacturers to agree on one. But as we know, they aren’t even capable of agreeing to use the “standard” and “commodity” DeviceNet connectors.

Happy Easter to all those who celebrate it.

norse: I think my comment about Ethernet never being able to reach anywhere near its 100% maximum, was valid because I wanted to stress that even though CAN bus has a much lower bandwidth, it can use all 100% of its theoretical bandwidth. I just wanted readers to appreciate that distinction.

On the whole most manufacturer proprietary PGNs that are of any interest to the general user should be used as a stop gap when a standard PGN is not defined to handle the required data values. Once the NMEA creates a new PGN to handle the data, manufacturers should move over to the new standard PGN. There will always be some proprietary PGNs that remain, but the hope is that these are only used between a manufacturer’s product and their own software for configuration or reprogramming purposes and so their details are not of any interest to the general user, nor are they sent periodically.

Now that some of the dust has settled, I’ll make a few comments from a “Furuno Point of View”…

1. We believe in smaller discrete NMEA2000 Networks! This is a safety issue that no one else seems to care about with respect to single backbone NMEA2000 networks.

Let me make this statement right now:

NMEA2000 in a Single Backbone Configuration is DANGEROUS!!!!!!!!!!!

Indeed, when we first conceived this new networking standard in the NMEA Standards Committee 17 years ago (originally called “NMEA0193” ;-), some of the main concentration was on the ability to have gateways, or other means to separate networks because of safety concerns. After all, these networks were going to be installed on boats and hidden away as opposed to a factory floor where every CAN node is exposed.

In fact, here is a link to the NMEA’s own www site to a paper written by the true “FATHERS” of NMEA2000 in 2002:

http://www.nmea.org/Assets/nmea-2000-digital-interface-white-paper.pdf

NOWHERE in this paper is a reference to a single NMEA2000 Backbone for any vessel. WHY? Because it is dangerous to have every critical navigation sensor wired to the same, unfused, 12VDC Copper wire loop with a single, or even multiple power supplies! Who will argue that it is prudent to wire every critical navigation sensor on a vessel to the same breaker, or connect them all with the same unfused copper wire? This is in effect what many manufacturers and even the NMEA is promoting.

Recently, even the NMEA Standards Committee can’t seem to understand this simple fact. I believe that this is largely because it is more expensive to create a system like Navnet 3D where each display has its own NMEA2000 Backbone and acts as a Gateway/Router to other NMEA2000 Networks on other MFDs or other parts of the vessel. This design philosophy IS THE ORIGINAL INTENT OF NMEA2000!!!!!!!! And, not a single backbone network where one device in the bilge can bring down an antenna on the mast along with everything else! Furthermore, when this does happen, which it will, I defy anyone with any tool to quickly troubleshoot and isolate the problem with a single backbone system! This is the reason why Ethernet migrated from a Ring/BUS Network Topology, to a STAR Based Topology. Anyone can check lights or unplug segments to isolate a problem on a star network.

Ben may choose to mention the IEC’s (61162-3) opinion on NMEA2000 as well…

2. A lot has been mentioned about our FI50 Instrument Daisy Chaining capability and how “Dangerous” this practice is but, no one is talking about this single backbone issue. With smaller, discrete networks, daisy-chaining is NOT a problem, saving money, installation time, and has fewer connection points/cables.

3. Yes, the current software revision in our Navnet 3D MFDs does check the status of the connected NMEA2000 components often. This is because NN3D has an automatic back-up switchover capability. In the event that a NMEA2000/NMEA0183 sensor connected to a MFD fails, it will automatically switch over to another device on the same or separate MFD and pass it over the entire network. This is a safety feature above and beyond the simple separation of NMEA2000/NMEA0183 Networks.

The above said, we do want to be good “Network Neighbors” and are working to reduce our NMEA2000 Bandwidth on a future software revision which has been mentioned here and will be released this Summer.

Until that time, we apologize if our GPS, Radar, Depth, or Wind sensors interfere slightly with the temp sensor in the icebox or the networked lightswitch in the Crapper!

Furuno Tech:

I appreciate your contribution here and this is good information. However, I recently discovered a feature of your N2K network bridging that I have an issue with: When the MFD (MFDBB in my case) forwards data from remote sensors (connected to the DRS), rather than passing the N2K sentence directly, the sentence is rewritten so that the remote sensor’s data appears to originate with the MFD. In other words, the original device ID is replaced by the MFD’s device ID.

I understand that with discrete N2K backbones if you didn’t do this you would have address collisions. However your approach prevents direct addressing of devices on the remote bus by, say, a configuration tool. In addition, because the MFD is prioritizing redundant data coming from different devices, I’m losing some flexibility regarding how my non-MFD displays handle data from those remote N2K backbones.

It seems to me that you could come up with a smart way of rewriting addresses so all devices on remote N2K buses could appear on the main bus with distinct device IDs.

Would appreciate your thoughts.

/afb

I too appreciate your comment, Furuno Tech, but I think you leave a lot to argue about…

* The multi-NMEA-2000-networks diagram in the white paper you linked to is clearly on a ship size system. It also apparently uses direct N2K-to-Ethernet gateways, which might be valuable devices, but don’t yet exist. Turning a boat’s N2K sensor data over to a single manufacturer’s Ethernet network is not the same thing in my view. (See Adam’s comment for one of several reasons why.)

* What’s more, the paper pretty clearly does reference a single backbone, right in the introduction: “Besides the greater amount of control and integration provided, NMEA 2000 replaces with a single cable all of the wiring of up to 50 NMEA 0183 interconnections and can handle the data content of between 50 and 100 NMEA 0183 data streams.”

* I’ve yet to hear of a specific instance where an entire N2K network experienced a complete electrical failure. I also don’t understand what you mean by “unfused”, given the power tap overload protection requirements. I’m quite willing to write about such a failure on Panbo if I receive good documentation. I’m also willing to do a lab experiment if that’s possible without destroying gear.

* I have heard a few vague stories about large N2K network data problems, and at least one involved Furuno MFDs. And, frankly, I don’t understand why so much bandwidth has to be used so that you can “check the status of the connected NMEA2000 components often”. I’ve seen all sorts of N2K displays roll over to redundant sensors when the original source is removed or incapacitated. They also typically see a new sensor added “hot” to the network quickly. Heck, the Navico MFDs can watch multiple GPS sensors and use the one with the highest accuracy calculation, without asking for special product ID transmissions.

* At first, I was enthusiastic about the possibility of transporting N2K data from an antenna mast via NavNet3D Ethernet. But I got uneasy when I realized that the feature apparently doesn’t include all N2K data, and that the power architecture meant a boat might have to keep a radar on standby just to keep a GPS powered up.

* Bottom line: I’ve been around long enough to value redundancy highly, but I want it to be independent of any one manufacturer if possible. And it does seem possible, especially with NMEA 2000. In fact, N2K sensor and display redundancy is plain easy. The harder part is N2K network redundancy. As noted, I haven’t seen evidence that they fail very often, but everything fails. The solutions that appeal to me are independent critical navigation appliances — like a laptop & GPS — or small backup N2K backbones ready to go in an emergency. More could be done. For instance, I’d like to see standardized gateways that let independent N2K backbones share data with each other and/or Ethernet in such a way that no one network could be brought down by the other.

Ben,

First, The paper does not necessarily represent a ship-sized system. Any boat with more than one display or sensor installed for redundancy purposes should consider single point failure potential of a Single Backbone Bus. A single backbone simply does not provide this protection.

Your suggestion of small backup N2K backbones for redundancy is EXACTLY what Navnet 3D provides WITHOUT resorting to a separate backup system yet, you appear to be arguing against your own suggestion which puzzles me!

Second, “Power Tap Overload Protection?” – Yes, this is the single fuse for the entire BUS! Often the BUS will be fused much, much higher than any one individual component could possibly consume. For example, take a single backbone NMEA2000 Bus that is current limited (via a Power Tap) at 5 amps and normally consumes 2 amps. If a NMEA2000 Sensor on this BUS fails in the form of a short circuit, it could still pull over 30 watts until it melts and falls off or starts a fire. My point is that every “T” on the bus is hardwired! Following the smoke trail will be the only way to find the problem even if it doesn’t start a fire. If the failure is not catastrophic and the fuse blows or power supply shuts down, disconnecting components and connections will be the only way to isolate the problem. I have already seen this in practice. This scenario is not “Far Fetched” considering NMEA2000 Buses that stretch from the Bilge to the top of the mast. And, it is exactly what I mean by “Unfused”. You should be able to simulate it without too much trouble in your lab.
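The "over 30 watts" figure can be checked with simple arithmetic. The sketch below assumes a nominal 12 V supply (actual bus voltage varies) and that the faulted device can draw whatever headroom remains under the current limit; the function name is made up for illustration.

```python
NOMINAL_VOLTS = 12.0  # assumption: nominal bus supply voltage


def fault_headroom_watts(limit_amps, normal_amps, volts=NOMINAL_VOLTS):
    """Power a shorted device could draw before the bus current limit trips."""
    return (limit_amps - normal_amps) * volts


# The example above: 5 A limit, 2 A normal consumption
print(fault_headroom_watts(limit_amps=5, normal_amps=2), "watts")
```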

Third, until now, we haven’t been requested to pass other specific PGNs through the Navnet 3D Gateway and yes, we do need to be NMEA2000 spectrally efficient as I have admitted and will achieve. That said, we do have some overhead and can consider specific requests. You are also correct that a universal NMEA2000 Gateway to Ethernet doesn’t exist. More importantly, without support for multiple backbones as Furuno provides through a dedicated MFD network, it would suffer from exactly the same single point failure potential.

Ben, well put. Your comment about having to keep the radar *and* MFD powered up is another reason I am going to disconnect my Airmar PB200 from the mast-top network bridged via Furuno’s ethernet and instead connect it directly to the primary N2K bus. I can definitely imagine situations (at anchor being the obvious one) in which I don’t want the noisy, power-hungry MFDBB running but still want the wind and heading data from the PB200 to be available. I’ll keep the SC30 connected to the DRS since the sat compass’s main purpose is correction of the sounder data and primary heading for the radar.

Why in the world would they pick the N2K network to share XM/weather data? That’s a whole lot of traffic that belongs to a faster network. i.e. ethernet.

Israel, XM Weather looks like a lot of information but the data is highly compressed and optimized because XM bandwidth is wicked scarce. I think Garmin decided to use N2K to carry XM because it makes for an easy install and it will work with even their non-Ethernet products. I have a theory on another reason, but I’ll save that for an entry I’m planning on how well XM looks on Garmin MFDs these days.

I did ask Garmin if they have any concerns about the bandwidth XM is using and they say they do not, because of the prioritization explained above, and how little data it actually involves. In fact, they’ve discontinued the GDL 30/30a XM receiver (though there are still some in the pipeline, and they work with all Garmin Marine Net MFDs). The GXM 51 will be the only Garmin marine XM receiver going forward.

There seems to be a lot of confusion, mis-information and debate concerning NMEA 2000. This is unfortunate but understandable given that it is a proprietary, closed “standard”.

NMEA 2000 is derived from CANbus however, which is extensively documented. One excellent and free learning resource that I have found for CAN is

http://www.vector-elearning.com/vl_einfuehrungcan_portal_en,,378422.html

NMEA 2000 is an open standard since anyone can use it. It’s only a proprietary standard if you think all open standards must be free.

If you do think that any open standard which charges for documentation and/or certification is proprietary, then how do you differentiate them from truly proprietary standards, like, for instance, the various broadband Ethernet-based networks developed by Garmin, Furuno, Navico, and Raymarine?

Are you really willing to say that those protocols and NMEA 2000 are equally unavailable?

Ben, it is proprietary; it’s owned by NMEA, and it’s not open in the conventional IT sense of the term.

It’s a bit late for my comments, but the NMEA/CANbus prioritization system has drawbacks.

Firstly, it’s not dynamic; it cannot be changed on a system by system basis.

Secondly, it only works if you need the priority order as set out by the NMEA PGNs. It may be that you need a lower order PGN to be higher priority in your network, and that can’t be done.

As the proliferation of private PGNs has grown enormously and is likely to continue growing as producers try to enhance their offerings over their competitors, the priority schema will become more of a problem.

CANbus was designed for a closed, single design environment, where no user added equipment existed. The basic system doesn’t even support device addressing!! Address claim, fast packet transfers, information request protocols, etc. all had to be grafted on to the basic spec to try and address a user modifiable network.

I’m a fan of canbus, but not on boats where users install equipment and more importantly have to diagnose and fix faults themselves. In this case Canbus is very unsuitable.

So, Dave, then AIS is also a proprietary protocol? And ABYC marine wiring standards? And all the other marine standards created by standard setting organizations like the ITU and IEC?

But, wait, you also want to free manufacturers to set standard PGN priorities on their own, instead of in the open, consensual, everyone-welcome-to-participate way the NMEA does it?

Also, did you not read Andy Campbell’s well informed description of how Proprietary PGNs work?

“Manufacturer Proprietary PGN messages have the lowest possible priority on the NMEA 2000 bus, so regardless of how many are sent by a single node, they can only ever block other Proprietary messages. All standard PGN’s have a higher priority and will get through long before these Proprietary messages are allowed on the bus.”

Geez Dave, isn’t that what NMEA did, “grafted on to the basic spec a user modifiable network”?

When I can plug two sensors, a display, and an MFD into a backbone in 10 minutes and have both the display and MFD show me the data, and in addition have no single device as a single point of failure and connectors that can’t pull loose, I have to say this is a big big leap over NMEA-0183 and as well a leap over my USB desktop hub for that matter that has two single points of failure and won’t allow me to share USB devices between computers like NMEA-2000 allows me to have multiple MFDs and displays.

So, why pooh-pooh CANbus in this way? Are you just trying to craft an argument without using the “e” word?

By the way, hats off to all my fellow ethernet crazies that are either seeing the light, or at a minimum refraining from joining the fray here and keeping it on the forum instead.

I have worked with XM data before and saw the specs for the data that the Garmin device provides and still don’t see N2K as the right network to share the data on. Even if they compressed the data, it would have to be a new extremely efficient algorithm to make sense in terms of N2K messages (IMHO).

Even if N2K proprietary messages are ranked so they have the lowest priority on the CAN network, adding much traffic could still affect other devices because those messages will be eventually transmitted and chances are that other devices need to parse the message… and at the very least determine that they don’t care about it.

This has to do somehow with the fact that NMEA tries to control how manufacturers request product information… because it is a very heavy message and so can create lots of traffic.
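What "determine that they don’t care about it" looks like in practice can be sketched as a decode-and-filter step on the 29-bit identifier. This is a simplified illustration of the usual J1939-style layout, not code from any vendor; the example identifiers are constructed by hand, and many CAN controllers can do this kind of filtering in hardware before the host processor ever sees the frame.

```python
def decode_can_id(can_id):
    """Split a 29-bit identifier into priority, PGN, destination and source."""
    priority = (can_id >> 26) & 0x7
    data_page = (can_id >> 24) & 0x3        # reserved bit plus data page bit
    pdu_format = (can_id >> 16) & 0xFF
    pdu_specific = (can_id >> 8) & 0xFF
    source = can_id & 0xFF
    if pdu_format < 240:
        # Addressed message: the low byte is a destination, not part of the PGN
        pgn = (data_page << 16) | (pdu_format << 8)
        destination = pdu_specific
    else:
        # Broadcast message: the low byte belongs to the PGN
        pgn = (data_page << 16) | (pdu_format << 8) | pdu_specific
        destination = 255
    return {"priority": priority, "pgn": pgn,
            "destination": destination, "source": source}


def wants(can_id, interesting_pgns):
    """Cheap check a receiver can make before parsing any payload."""
    return decode_can_id(can_id)["pgn"] in interesting_pgns


request = 0x0DED0B05       # hand-built: PGN 126208 sent to address 11 from address 5
proprietary = 0x1DFF000B   # hand-built: proprietary-range PGN 130816 from address 11

print(decode_can_id(request))
print(wants(proprietary, {129029, 130306}))   # a display that only wants GNSS and wind
```

The check itself is a handful of shifts and a set lookup, so the cost of ignoring unwanted frames is small per frame, though it does scale with the number of frames on the bus.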

There were a couple specific problems that we found that led us away from N2K for motor control. We still use CANbus but we have four private CAN networks to keep congestion down and cabling under control.

The first issue has to do with wiring when you need long branches from your main NMEA backbone. We found significant reflections if the side lobes to the network are more than a quarter wavelength. Bear in mind that the underlying CAN network was designed for the distances inside an automobile. A couple of our installations have needed 150-200 feet from end to end.

Second, a lone transmitter with no receiver on the N2K bus can crash the bus in such a way that the receivers can never get on the bus without shutting down the network first. We found this with a particular N2K generator interface.

Third, the bus speed and congestion can be a problem for a real time application. In our case it was throttle control. We found that data bursts could make very unstable response to throttle movement.

Last, we have found that the NMEA cabling has proven less robust than our (vastly cheaper) Ethernet cabling. We’ve had to debug quite a few problems that were due to cabling. Most of our cables were the expensive ones that you can find at the marine stores.

I hope you didn’t misunderstand me; I certainly never meant to imply that safety concerns are not important. I simply think that the NMEA certification process provides a false sense of security and that the N2K bus, especially a large multi-vendor bus, is not as safe as I’d like to see in a mission critical application. Networks can certainly be designed for safe operation.

The higher layer protocols, which are really the only part an “app” is likely to see are entirely separate from these issues. Also, CANbus has certainly been implemented in many ways including twisted pair, DCbus and MODbus. None of this, incidentally is expensive NMEA intellectual property, just misguided implementation.

– Bill